Keyword [LGRAN]

Wang P, Wu Q, Cao J, et al. Neighbourhood watch: Referring expression comprehension via language-guided graph attention networks[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019: 1960-1968.

1. Overview

1.1. Motivation

- In MAttNet, language and region features are learned or designed independently, without being informed by each other.

This paper proposes the Language-guided Graph Attention Network (LGRAN), which consists of two modules:

1) Language Self-attention Module

2) Language-guided Graph Attention Module (node attention & edge attention)

The attention makes the referring expression decision both visualisable and explainable.

- Directed graph
  - node. objects from the proposal or GT set
  - edge
    - intra-class edge. spatial relationship
    - inter-class edge. spatial relationship + the neighbouring object's visual feature

1.2. Dataset

- RefCOCO

- RefCOCO+

- RefCOCOg

2. LGRAN

2.1. Problem

1) Given an image $I$ and a referring expression $r$, localise the object $o'$ referred to by $r$ from the object set $O=\{o_i\}_{i=1}^{N}$ of $I$.

2) $O$ is either given as ground truth (GT) or obtained by a proposal generation method.

2.2. Language Self-Attention Module

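Outputs: a phrase embedding $s$ and a module weight $w$ for each of the three components (subject, intra-class relationship, inter-class relationship).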

1) $s_{sub}$, $w_{sub}$

2) $s_{intra}$, $w_{intra}$

3) $s_{inter}$, $w_{inter}$
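A minimal sketch of how the three $(s, w)$ pairs could be produced, assuming a MAttNet-style design: each component runs its own softmax attention over the words and pools the word embeddings into its $s$, while the three weights $w$ come from one softmax over per-component logits. The names `module_queries` and `module_logits` are hypothetical stand-ins for learned layers, and the hidden states are assumed to come from some sentence encoder (e.g. a Bi-LSTM); this is not the authors' code.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def language_self_attention(word_embs, hidden, module_queries, module_logits):
    """Hypothetical sketch, not the paper's implementation.

    word_embs:      T word embeddings e_t (lists of floats)
    hidden:         T encoder hidden states h_t (assumed, e.g. Bi-LSTM outputs)
    module_queries: name -> vector that scores each h_t (stand-in for a learned layer)
    module_logits:  name -> logit for that module's weight (stand-in for a learned layer)
    Returns name -> (s_m, w_m).
    """
    names = list(module_queries)
    # Module weights w_m: softmax over the components, so they sum to 1.
    weights = softmax([module_logits[n] for n in names])
    dim = len(word_embs[0])
    out = {}
    for name, w in zip(names, weights):
        # Word attention for this component: softmax over the T words.
        attn = softmax([dot(module_queries[name], h) for h in hidden])
        # Phrase embedding s_m: attention-weighted sum of word embeddings.
        s = [sum(a * e[d] for a, e in zip(attn, word_embs)) for d in range(dim)]
        out[name] = (s, w)
    return out
```

When `module_logits` favours `"sub"`, the subject component receives the largest $w$, so subject matching dominates the final score.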

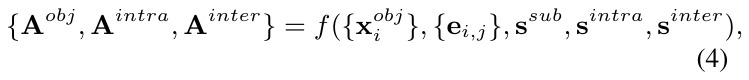

2.3. Language-guided Graph Attention Module

2.3.1. Graph Construction

1) Node set. $V=\{v_i\}_{i=1}^{N}$

2) Edge set. $E^{intra}$, $E^{inter}$

3) Intra-class edge set. each node is connected to its top $k$ nearest objects of the same category.

4) Inter-class edge set. each node is connected to its top $k$ nearest objects of other categories.

5) $k=5$
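The edge construction can be sketched as follows. The distance measure is an assumption here (Euclidean distance between box centres), each node's edges are treated as outgoing directed edges to its neighbours, and `build_edges` is a hypothetical helper rather than the authors' code.

```python
import math


def build_edges(boxes, labels, k=5):
    """Hypothetical sketch of intra-/inter-class edge construction.

    boxes:  list of (x1, y1, x2, y2)
    labels: category of each box
    Returns (intra, inter): dicts mapping node i to the indices of its
    k nearest same-category / other-category objects, nearest first.
    Distance is assumed to be Euclidean distance between box centres.
    """
    centres = [((x1 + x2) / 2, (y1 + y2) / 2) for x1, y1, x2, y2 in boxes]
    intra, inter = {}, {}
    for i, ci in enumerate(centres):
        # All other objects, sorted by centre distance to object i.
        others = sorted(
            (j for j in range(len(boxes)) if j != i),
            key=lambda j: math.dist(ci, centres[j]),
        )
        # Directed edges i -> j, split by whether j shares i's category.
        intra[i] = [j for j in others if labels[j] == labels[i]][:k]
        inter[i] = [j for j in others if labels[j] != labels[i]][:k]
    return intra, inter
```

With $k=5$ and fewer than five candidates of a given kind, a node in this sketch simply keeps all of them (possibly none).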

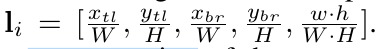

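6) Node $v_i$. visual feature (512-d) concatenated with location feature $l_i$ (5-d)

7) $e_{ij}$. edge feature encoding the relative location of $o_j$ with respect to $o_i$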

8) $x_c$. centre coordinate
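A sketch of the node and edge spatial features. It assumes that $l_i$ follows MAttNet's 5-d location feature, $l_i = [x_{tl}/W, y_{tl}/H, x_{br}/W, y_{br}/H, wh/(WH)]$, and that $e_{ij}$ takes the corners of $o_j$ relative to the centre $(x_c, y_c)$ of $o_i$, normalised by the width and height of $o_i$, plus the area ratio. These forms are assumptions for illustration; the exact definitions in the paper may differ.

```python
def location_feature(box, img_w, img_h):
    # Assumed MAttNet-style 5-d location: normalised corners + relative area.
    x1, y1, x2, y2 = box
    w, h = x2 - x1, y2 - y1
    return [x1 / img_w, y1 / img_h, x2 / img_w, y2 / img_h,
            (w * h) / (img_w * img_h)]


def node_feature(visual, box, img_w, img_h):
    # x_i = [visual feature (512-d in the notes) ; l_i (5-d)], by concatenation.
    return list(visual) + location_feature(box, img_w, img_h)


def relative_location(box_i, box_j):
    # Assumed e_ij: corners of o_j relative to the centre (x_c, y_c) of o_i,
    # normalised by o_i's width/height, plus the area ratio of o_j to o_i.
    x1i, y1i, x2i, y2i = box_i
    x1j, y1j, x2j, y2j = box_j
    wi, hi = x2i - x1i, y2i - y1i
    wj, hj = x2j - x1j, y2j - y1j
    xc, yc = (x1i + x2i) / 2, (y1i + y2i) / 2
    return [(x1j - xc) / wi, (y1j - yc) / hi,
            (x2j - xc) / wi, (y2j - yc) / hi,
            (wj * hj) / (wi * hi)]
```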

2.3.2. Language-guided Graph Attention

Node Attention
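Node attention can be sketched as additive attention: each node feature $x_i$ is scored against the subject phrase $s_{sub}$, and the scores are normalised with a softmax over all nodes. The tanh-based scoring form and the parameter names `W_s`, `W_v`, `q` are assumptions standing in for learned layers.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(mat, vec):
    # Matrix (list of rows) times vector.
    return [sum(a * b for a, b in zip(row, vec)) for row in mat]


def node_attention(s_sub, node_feats, W_s, W_v, q):
    """Hypothetical sketch: additive attention over all nodes, guided by s_sub.

    W_s, W_v project the phrase and node features into a shared space and
    q scores the result; all three stand in for learned parameters.
    Returns one weight per node; the weights sum to 1.
    """
    proj_s = matvec(W_s, s_sub)
    scores = []
    for x in node_feats:
        proj_x = matvec(W_v, x)
        hidden = [math.tanh(a + b) for a, b in zip(proj_s, proj_x)]
        scores.append(sum(qi * hi for qi, hi in zip(q, hidden)))
    return softmax(scores)
```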

Intra-class Edge Attention

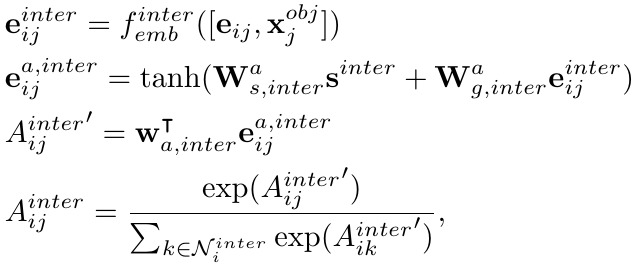
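A sketch of intra-class edge attention for a single node $v_i$: each outgoing intra-class edge feature $e_{ij}$ is scored against $s_{intra}$, the scores are softmax-normalised over the node's intra-class neighbours, and the edge features are pooled with those weights into a context vector. The additive scoring form and the names `W_s`, `W_e`, `q` are assumptions, and the sketch assumes the node has at least one intra-class neighbour.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(mat, vec):
    # Matrix (list of rows) times vector.
    return [sum(a * b for a, b in zip(row, vec)) for row in mat]


def intra_edge_attention(s_intra, edge_feats, W_s, W_e, q):
    """Hypothetical sketch for one node v_i.

    edge_feats: e_ij for each intra-class neighbour j of v_i (non-empty)
    Returns (weights over the neighbours, attention-pooled context vector).
    """
    proj_s = matvec(W_s, s_intra)
    scores = []
    for e in edge_feats:
        hidden = [math.tanh(a + b) for a, b in zip(proj_s, matvec(W_e, e))]
        scores.append(sum(qi * hi for qi, hi in zip(q, hidden)))
    weights = softmax(scores)
    # Context: attention-weighted sum of the edge features.
    dim = len(edge_feats[0])
    context = [sum(w * e[d] for w, e in zip(weights, edge_feats)) for d in range(dim)]
    return weights, context
```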

Inter-class Edge Attention
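Inter-class edge attention can be sketched the same way, except that each edge feature concatenates the neighbour's visual feature with the relative location $e_{ij}$, in line with the overview's description of inter-class edges. The concatenation order, the additive scoring form and the parameter names are assumptions for illustration, and at least one inter-class neighbour is assumed.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(mat, vec):
    # Matrix (list of rows) times vector.
    return [sum(a * b for a, b in zip(row, vec)) for row in mat]


def inter_edge_attention(s_inter, neighbour_feats, rel_locs, W_s, W_e, q):
    """Hypothetical sketch for one node v_i.

    neighbour_feats: visual feature of each inter-class neighbour o_j
    rel_locs:        e_ij for the same neighbours, in the same order
    Returns (weights over the neighbours, attention-pooled context vector).
    """
    # Edge feature for i -> j: [visual feature of o_j ; e_ij] (assumed order).
    edge_feats = [list(x) + list(e) for x, e in zip(neighbour_feats, rel_locs)]
    proj_s = matvec(W_s, s_inter)
    scores = []
    for f in edge_feats:
        hidden = [math.tanh(a + b) for a, b in zip(proj_s, matvec(W_e, f))]
        scores.append(sum(qi * hi for qi, hi in zip(q, hidden)))
    weights = softmax(scores)
    dim = len(edge_feats[0])
    context = [sum(w * f[d] for w, f in zip(weights, edge_feats)) for d in range(dim)]
    return weights, context
```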

2.3.3. The Attended Graph Representation

2.3.4. Matching Module

Loss. cross-entropy over all objects in $O$, with the referred object $o'$ as the target.
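A sketch of matching and the loss under stated assumptions: each phrase embedding $s_m$ is compared with the corresponding attended feature of object $o_i$ by cosine similarity (a MAttNet-style choice), the per-module similarities are combined with the module weights $w_m$, a softmax is taken over all objects in $O$, and cross-entropy is computed against the referred object. At test time the prediction is the highest-scoring object. The cosine matching function and the helper names are assumptions, not the paper's exact formulation.

```python
import math


def cosine(a, b):
    # Cosine similarity between two non-zero vectors.
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def match_and_loss(phrases, weights, object_feats, target):
    """Hypothetical sketch.

    phrases:      module name -> phrase embedding s_m
    weights:      module name -> module weight w_m
    object_feats: per object, module name -> attended feature for that module
    target:       index of the referred object in O
    Returns (scores, cross-entropy loss, predicted index).
    """
    # Score of object i: sum_m w_m * cos(s_m, feature of o_i for module m).
    scores = [sum(weights[m] * cosine(phrases[m], feats[m]) for m in phrases)
              for feats in object_feats]
    # Cross-entropy of softmax(scores) w.r.t. target, via log-sum-exp.
    mx = max(scores)
    log_z = mx + math.log(sum(math.exp(s - mx) for s in scores))
    loss = log_z - scores[target]
    pred = max(range(len(scores)), key=scores.__getitem__)
    return scores, loss, pred
```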

3. Experiments

- IoU > 0.5. a prediction is counted as correct if its IoU with the ground-truth box exceeds 0.5
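The IoU criterion can be computed as below. Boxes are assumed to be `(x1, y1, x2, y2)` corner coordinates; `iou` and `is_correct` are illustrative helpers.

```python
def iou(a, b):
    # Intersection rectangle (empty if the boxes do not overlap).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def is_correct(pred_box, gt_box, threshold=0.5):
    # A prediction counts as correct when IoU is strictly above the threshold.
    return iou(pred_box, gt_box) > threshold
```

For example, two 2×2 boxes shifted by one unit horizontally overlap with IoU 1/3, so that prediction would be counted as wrong.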